By Jair Ricardo de Moraes (ERNI Germany)

In today’s fast-paced business world, companies are increasingly turning to data science and machine learning (ML) to gain a competitive advantage. The key to the success of these projects is robust requirements engineering (RE). Mistakes made at this stage produce misleading conclusions from the data, which in turn can lead to expensive business mistakes. Our agile RE service, combined with our data and ML expertise, helps you avoid this and unlock the full potential of your project.

The importance of agile RE

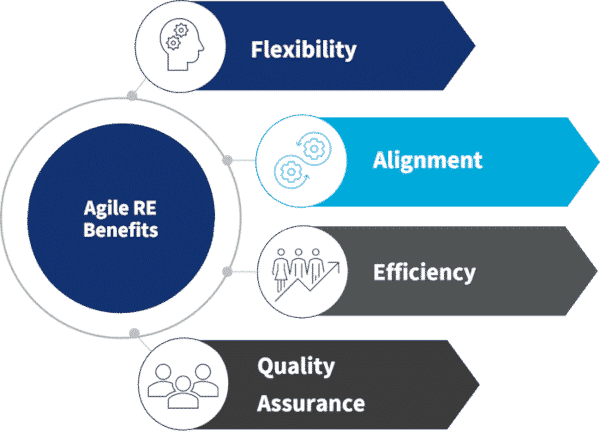

Data and ML projects are complex and iterative, have ever-evolving objectives and carry an extra layer of uncertainty. Requirements may need to be reconsidered more often, and it is not always clear how many iterations will be needed to develop a model that effectively addresses the business problem. Without a well-defined, adaptable approach, iterations can quickly become derailed. This is where agile RE comes into play: it keeps projects on track through flexibility, alignment, efficiency and quality assurance.

Flexibility allows changes to be made throughout the project as new insights emerge or business goals shift.

Alignment ensures that everyone understands and agrees on project goals and requirements, reducing the risk of miscommunication.

Efficiency comes from asking the right questions in the right way upfront. This allows the project to be broken down into manageable components, which helps set clear expectations and identify potential challenges early. Teams can then prioritise tasks, allocate resources effectively and reduce the overall project workload.

Quality assurance helps to uncover and mitigate issues early in the project lifecycle through constant feedback and testing. This is where MLOps (Machine Learning Operations) becomes crucial. MLOps, a fusion of DevOps and data/AI (artificial intelligence), provides a set of best practices, tools and workflows for building software that integrates AI models and data science. It supports tracking data pipelines, versioning data and models, testing and deployment.

Business case: Device maintenance prediction

A company specialising in industrial 3D printers asked us to help optimise the predictive maintenance of its devices, which are installed worldwide, using alarms based on usage patterns and performance data. The company’s customers were facing unpredictable downtime that reduced the efficiency of their processes. Solving this issue could give the company a market advantage and make its devices more competitive, without the need to modify and re-certify them.

The primary challenge was to design a model that could accurately predict the need for maintenance, thereby reducing downtime and increasing device efficiency. Big data and various analysis techniques, such as statistical models and ML, were used to predict and detect failures. The ML model generated predictive or threshold-based events and alarms, which were then used to plan maintenance ahead of time and optimise the printing process.
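The article does not describe how the alarms were computed. As a rough illustration of the two alarm types only, the Python sketch below raises a threshold alarm when a reading exceeds a limit, and a predictive alarm when a least-squares trend over the last few readings would reach that limit soon. The signal, the `limit`, `window` and `horizon` parameters and the `Alarm` type are hypothetical, and the simple linear trend stands in for the project’s actual ML model.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Alarm:
    kind: str     # "threshold" (limit crossed) or "predictive" (crossing expected soon)
    index: int    # position of the reading that triggered the alarm
    message: str


def slope(values: Sequence[float]) -> float:
    """Least-squares slope of `values` against their position (units per reading)."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    den = sum((x - mean_x) ** 2 for x in range(n))
    return num / den if den else 0.0


def check_readings(readings: Sequence[float], limit: float,
                   window: int = 5, horizon: int = 10) -> List[Alarm]:
    """Threshold alarm if a reading exceeds `limit`; predictive alarm if the trend
    over the last `window` readings (window >= 2) reaches `limit` within `horizon` readings."""
    alarms: List[Alarm] = []
    for i, value in enumerate(readings):
        if value > limit:
            alarms.append(Alarm("threshold", i, f"{value} exceeds limit {limit}"))
            continue
        if i + 1 >= window:
            trend = slope(readings[i + 1 - window:i + 1])
            if trend > 0 and (limit - value) / trend <= horizon:
                steps = (limit - value) / trend
                alarms.append(Alarm("predictive", i, f"limit expected in ~{steps:.1f} readings"))
    return alarms


# Hypothetical temperature series from one printer, drifting upwards.
readings = [50.0, 50.0, 50.0, 50.0, 50.0, 51.0, 53.0, 56.0, 59.0, 61.0]
for alarm in check_readings(readings, limit=60.0, window=5, horizon=3):
    print(alarm.kind, alarm.index, alarm.message)
```

In this series the predictive alarms fire two readings before the limit is actually crossed, which is the window in which maintenance could be scheduled.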

Phases of RE

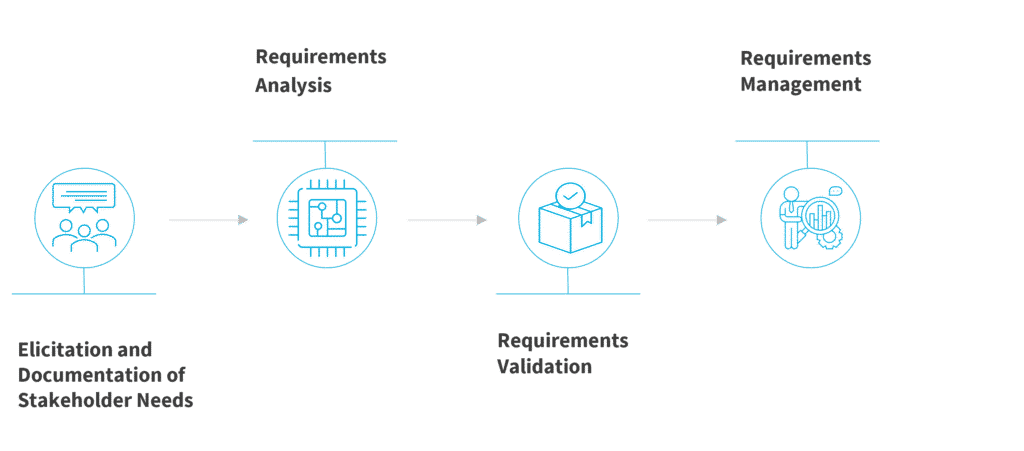

In this context, our experts worked with the customer’s team to create a requirements engineering plan that supported the full development cycle of the ML model and comprised the following stages:

Elicitation and documentation of stakeholder needs, based on an in-depth stakeholder analysis that included device users and maintenance technicians. Surveys and workshops were conducted to gather their needs, expectations and constraints.

Requirements analysis and documentation, using the MoSCoW method to prioritise requirements as ‘Must-have’, ‘Should-have’, ‘Could-have’ and ‘Won’t-have’. This prioritisation helped resolve potential conflicts between requirements and align them with the project’s scope and timeline. UML (Unified Modelling Language) models were used to illustrate the requirements and show the associations between the entities involved in the project.

Requirements validation through reviews and inspections. The stakeholders reviewed the requirements, and their feedback was incorporated to ensure that the final set of requirements accurately reflected their needs and expectations.

Requirements management, the final step in the RE process, which covered managing the requirements throughout the project lifecycle. Traceability was applied to track changes to the requirements and assess their impact on the project.
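To make the MoSCoW prioritisation from the analysis stage concrete, the sketch below fills an iteration in priority order within a capacity budget and never schedules ‘Won’t-haves’. The effort points, the capacity and the example requirements are hypothetical; the point of the design is that Must-haves exceeding the capacity surface immediately as a scope or timeline conflict.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import List, Tuple


class MoSCoW(IntEnum):
    # Lower value = higher priority, so sorting puts Must-haves first.
    MUST = 0
    SHOULD = 1
    COULD = 2
    WONT = 3


@dataclass
class Requirement:
    rid: str
    text: str
    priority: MoSCoW
    effort: int  # hypothetical effort estimate, e.g. story points


def plan_iteration(reqs: List[Requirement], capacity: int) -> Tuple[List[str], List[str]]:
    """Schedule requirements in MoSCoW order until `capacity` is used up.

    Won't-haves are never scheduled. If the Must-haves alone exceed the
    capacity, raise an error: scope or timeline must be renegotiated."""
    must_effort = sum(r.effort for r in reqs if r.priority == MoSCoW.MUST)
    if must_effort > capacity:
        raise ValueError(f"Must-haves need {must_effort} points, capacity is {capacity}")
    planned: List[str] = []
    deferred: List[str] = []
    used = 0
    # sorted() is stable, so requirements keep their input order within a category.
    for r in sorted(reqs, key=lambda req: req.priority):
        if r.priority != MoSCoW.WONT and used + r.effort <= capacity:
            planned.append(r.rid)
            used += r.effort
        else:
            deferred.append(r.rid)
    return planned, deferred


reqs = [
    Requirement("R3", "Dashboard for maintenance technicians", MoSCoW.SHOULD, 3),
    Requirement("R1", "Train on device usage and performance data", MoSCoW.MUST, 5),
    Requirement("R5", "Automatic spare-part ordering", MoSCoW.WONT, 4),
    Requirement("R2", "Raise an alarm before a likely failure", MoSCoW.MUST, 3),
    Requirement("R4", "Push notifications to mobile devices", MoSCoW.COULD, 2),
]
print(plan_iteration(reqs, capacity=11))
```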

The impact of RE

The systematic elicitation, documentation, validation and management of requirements ensured that the ML model was tailored to the stakeholders’ needs. During the development phase, for instance, the ML model was trained on a large dataset of device usage patterns and performance data, as specified in the ‘Must-have’ requirements. The model’s performance was continually evaluated and fine-tuned to ensure it met the ‘Should-have’ requirements. The RE process also helped to manage changes effectively: when new device variants were added to the scope of the ML model, traceability was used to assess the impact of this change on the project, and the model was updated accordingly.
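Traceability-based impact analysis, as used when new device variants were added, can be sketched as a graph of links from each requirement to the artefacts derived from it, walked breadth-first when a requirement changes. The artefact IDs below are hypothetical, and the structure is a minimal illustration rather than the tooling used in the project.

```python
from collections import defaultdict, deque
from typing import Dict, List, Set


class TraceabilityMatrix:
    """Forward traceability: each item (requirement, dataset, model, test)
    links to the items derived from it."""

    def __init__(self) -> None:
        self._links: Dict[str, Set[str]] = defaultdict(set)

    def link(self, source: str, *targets: str) -> None:
        self._links[source].update(targets)

    def impacted_by(self, changed: str) -> List[str]:
        """Breadth-first walk returning every item downstream of `changed`."""
        seen: Set[str] = set()
        queue = deque([changed])
        while queue:
            item = queue.popleft()
            for target in self._links.get(item, set()):
                if target not in seen:
                    seen.add(target)
                    queue.append(target)
        seen.discard(changed)  # ignore cycles leading back to the changed item
        return sorted(seen)


# Hypothetical links for a requirement covering a new device variant.
tm = TraceabilityMatrix()
tm.link("REQ-07", "DS-usage-logs", "TEST-variants")
tm.link("DS-usage-logs", "MODEL-v2")
tm.link("MODEL-v2", "TEST-precision")
print(tm.impacted_by("REQ-07"))
```

Changing REQ-07 flags the dataset, the model version and both tests for review, while a change to the model itself only touches the tests downstream of it.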

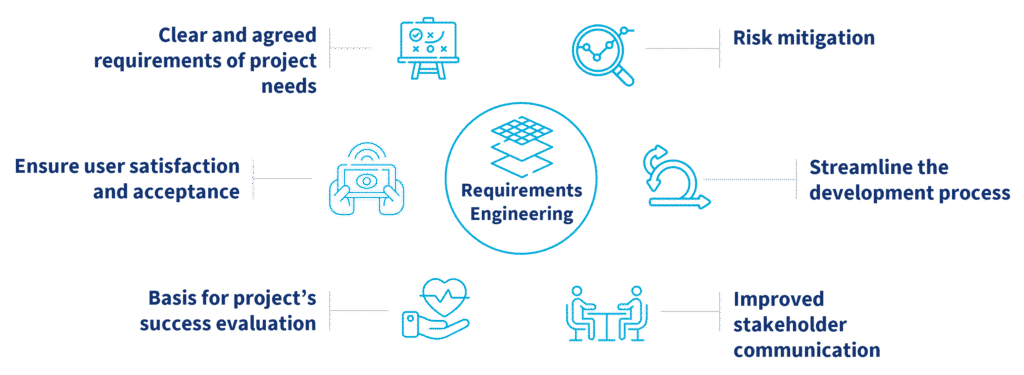

The RE approach employed in this project helped our customer:

- to understand the project requirements clearly and precisely. This ensured that the ML model for predicting the maintenance needs of the 3D printers was built and trained according to the project’s specific demands.

- to identify potential risks at an early stage of modelling. This included risks related to data quality, model performance and stakeholder expectations. Early identification of these risks allowed mitigation strategies to be put in place.

- to streamline the development process. By defining requirements in advance, the team could work more efficiently.

- to improve communication with different stakeholders (project managers, developers, and end users). A well-defined set of requirements ensured everyone was on the same page about what the ML model should do, avoiding misunderstandings.

- to provide a basis for evaluating the success of the ML predictive maintenance project. By comparing the final model with the initial requirements, stakeholders could assess whether the project had met its objectives.

- to ensure that the ML model met the needs of its users, increasing their satisfaction with the model and their acceptance of it.
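Using the initial requirements as the yardstick for success can be illustrated by comparing evaluation metrics with target values. The sketch below computes the precision and recall of maintenance alarms against observed failures and lists any target the model misses; the choice of metrics and the target values are assumptions for illustration, not the project’s actual acceptance criteria.

```python
from typing import Dict, Sequence


def precision_recall(alarms: Sequence[bool], failures: Sequence[bool]) -> Dict[str, float]:
    """Precision and recall of maintenance alarms against observed failures.

    alarms[i] is True if the model raised an alarm for period i;
    failures[i] is True if the device actually needed maintenance then."""
    tp = sum(1 for a, f in zip(alarms, failures) if a and f)
    fp = sum(1 for a, f in zip(alarms, failures) if a and not f)
    fn = sum(1 for a, f in zip(alarms, failures) if f and not a)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {"precision": precision, "recall": recall}


def unmet_targets(metrics: Dict[str, float], targets: Dict[str, float]) -> Dict[str, float]:
    """Return the achieved value of every metric that falls short of its target."""
    return {name: metrics[name] for name, minimum in targets.items() if metrics[name] < minimum}


# Hypothetical evaluation data and requirement targets.
alarms = [True, True, False, True, False, False]
failures = [True, False, False, True, True, False]
metrics = precision_recall(alarms, failures)
targets = {"precision": 0.6, "recall": 0.8}
print(metrics, unmet_targets(metrics, targets))
```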

What to consider when starting a data and ML project

The success of ML projects hinges on professionally defined data requirements. Identifying relevant, high-quality data sources that accurately represent the problem being addressed is fundamental. The data used should be current, clean and suitable for model training, because its quality has a substantial influence on the ML model’s accuracy and performance. By establishing clear requirements for the data, we can ensure that the model is trained effectively and yields accurate predictions and trustworthy results. The engineering of data, covering its evaluation, interpretation and further processing, is a key determinant of success. The expertise of requirements engineers therefore plays a significant role in ML projects.
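Data requirements such as ‘current’ and ‘clean’ become testable once they are written down as explicit checks. The sketch below assumes records are plain dictionaries with a `timestamp` field and validates freshness, missing values and plausible value ranges; the field names, ranges and the 24-hour freshness limit are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Any, Dict, List, Optional, Sequence


@dataclass(frozen=True)
class DataRequirement:
    """One machine-checkable data requirement for a single field (hypothetical)."""
    field: str
    required: bool = True              # missing values violate "clean"
    min_value: Optional[float] = None  # plausible range, e.g. physical limits
    max_value: Optional[float] = None


def check_dataset(records: Sequence[Dict[str, Any]], reqs: Sequence[DataRequirement],
                  now: datetime, max_age: timedelta) -> List[str]:
    """Return human-readable violations; an empty list means the data passes.

    Assumes every record carries a `timestamp` (datetime) field."""
    violations: List[str] = []
    # "Current": the newest record must be younger than max_age.
    newest = max((r["timestamp"] for r in records), default=None)
    if newest is None or now - newest > max_age:
        violations.append(f"data is stale: newest record {newest}")
    for i, rec in enumerate(records):
        for req in reqs:
            value = rec.get(req.field)
            if value is None:
                if req.required:
                    violations.append(f"record {i}: missing {req.field}")
                continue
            if req.min_value is not None and value < req.min_value:
                violations.append(f"record {i}: {req.field}={value} below {req.min_value}")
            if req.max_value is not None and value > req.max_value:
                violations.append(f"record {i}: {req.field}={value} above {req.max_value}")
    return violations


# Hypothetical printer telemetry and requirements.
requirements = [
    DataRequirement("device_id"),
    DataRequirement("nozzle_temp_c", min_value=0.0, max_value=400.0),
]
records = [
    {"timestamp": datetime(2024, 1, 10, 11, 0), "device_id": "P-001", "nozzle_temp_c": 210.0},
    {"timestamp": datetime(2024, 1, 10, 11, 5), "device_id": "P-002", "nozzle_temp_c": None},
    {"timestamp": datetime(2024, 1, 10, 11, 10), "device_id": "P-003", "nozzle_temp_c": 950.0},
]
for problem in check_dataset(records, requirements, datetime(2024, 1, 10, 12, 0), timedelta(hours=24)):
    print(problem)
```

Keeping the requirements as data rather than hard-coded conditions means stakeholders can review and change them without touching the checking logic.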

Besides tools, model selection, deployment, and maintenance, the determining factors in these projects are:

- stakeholder engagement

- scientific approach

- understanding the business context

- clear definition of the problem

Each of these is an integral part of agile RE, which gives organisations more flexibility: a project does not have to be postponed until everything is clear and fully documented. As a result, smart and reliable answers to important business questions arrive sooner.

ERNI has proven its competence in various projects around the globe. As your potential long-term partner offering Swiss quality and reliability, we cover the whole project lifecycle, with subject-matter experts for every role in data and ML projects.