by Sándor Zolta Székely Sipos (ERNI Romania)

Release notes are frequently long, detailed and challenging for users to navigate. This article shows how a GenAI-supported approach can deliver clearer, more targeted release information by tailoring content to different audiences, drawing on insights from an internal proof of concept.

Background/problem statement

One of our customers proposed a proof of concept to generate user-friendly release notes for their application using GenAI. Their existing release notes documentation exceeded 500 pages and contained extensive, highly detailed descriptions for each user story. Every user received the full document, even though large sections were irrelevant to their role. As a result, users struggled to find the information most important to them, and reviewing release notes was time-consuming and inefficient.

The idea was part of an innovation sprint within the SAFe framework that our customer followed while developing their solution, but the initiative was cancelled. Given its clear potential and strong fit for GenAI, the concept was taken forward as an independently developed prototype to demonstrate how such an approach could address the observed challenges.

Approach and tool exploration

Around the same time, at an AI conference in Cluj, we came across Langflow – an open-source visual environment for building AI agent workflows from drag-and-drop components such as LLMs, RAG pipelines and tools. Although it initially looked promising, its learning curve proved steeper than expected, and customisation proved limiting, which slowed progress.

Given these constraints, experimentation shifted to dify.ai, a low-code/no-code platform for building and running AI workflows, agents and applications. It supports both cloud and self-hosted setups, offers integrated retrieval pipelines, configurable model providers, versioned prompting and built-in support for collaboration. In short, it enabled a much faster progression from idea to working prototype.

Building the prototype

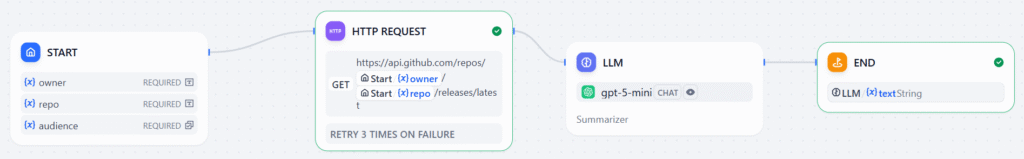

Using dify.ai’s workflow builder, a simple application was created where a user can:

- Enter the GitHub repository name (owner + repo)

- Select the target audience (end users, developers or marketing)

The workflow then:

- Retrieves the release notes for the latest GitHub release

- Passes the content to an LLM together with prompts tailored to the selected audience

- Outputs a concise, audience-appropriate summary
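The audience-specific prompting step can be illustrated outside Dify. The Python below is a minimal sketch, assuming a chat-style LLM API that accepts a list of system and user messages; the prompt texts, the `AUDIENCE_PROMPTS` table and the `build_messages` function are hypothetical and are not the prototype's actual prompts.

```python
"""Audience-specific prompt selection (a sketch, not Dify's internals)."""

# Hypothetical system prompts, one per audience offered by the prototype.
AUDIENCE_PROMPTS: dict[str, str] = {
    "end users": (
        "Summarise these release notes for end users. Focus on visible "
        "features, changed behaviour and fixed bugs, and avoid jargon."
    ),
    "developers": (
        "Summarise these release notes for developers. Highlight API "
        "changes, breaking changes, deprecations and upgrade steps."
    ),
    "marketing": (
        "Summarise these release notes for a marketing team. Emphasise new "
        "capabilities and customer benefits in a few short paragraphs."
    ),
}


def build_messages(release_notes: str, audience: str) -> list[dict[str, str]]:
    """Pair the selected audience's system prompt with the raw release notes."""
    key = audience.strip().lower()
    if key not in AUDIENCE_PROMPTS:
        raise ValueError(f"unknown audience: {audience!r}")
    return [
        {"role": "system", "content": AUDIENCE_PROMPTS[key]},
        {"role": "user", "content": "Release notes:\n\n" + release_notes.strip()},
    ]
```

For example, `build_messages("## v2.0\n- Added dark mode", "Developers")` returns a system message with the developer prompt followed by a user message carrying the notes. Keeping the release notes in the user message and the audience instructions in the system message mirrors the system/user split of Dify's LLM node.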

Components:

- START – defines the input fields (owner, repo, audience) and the next step

- HTTP REQUEST – calls the GitHub API; configures authorisation (GitHub API key), headers, params, body, SSL certificate verification, output variables, retry on failure (max retries, retry interval), error handling and the next step

- LLM – configures the model (Dify supports several model providers), the context (the response of the HTTP request in the previous step), the system prompt (high-level instructions for the conversation), the user prompt (instructions, queries or any other text input to the model, which can reference variables defined in previous steps), the API key and the next step

- END – defines the end of the workflow and the type of result it returns
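What the HTTP REQUEST node does can be approximated in plain Python. This is a sketch under stated assumptions, not Dify's implementation: it assumes GitHub's REST endpoint `GET /repos/{owner}/{repo}/releases/latest`, whose JSON response carries the release notes in its `body` field, and a GitHub token sent as a bearer token. The helper names and retry defaults are hypothetical.

```python
import json
import time
import urllib.error
import urllib.request
from typing import Callable, Optional, TypeVar

T = TypeVar("T")

GITHUB_API = "https://api.github.com"


def build_release_request(owner: str, repo: str,
                          token: Optional[str] = None) -> urllib.request.Request:
    """GET request for the latest published (non-draft, non-prerelease) release."""
    headers = {
        "Accept": "application/vnd.github+json",
        "X-GitHub-Api-Version": "2022-11-28",
    }
    if token:  # authorisation: a GitHub token sent as a bearer token
        headers["Authorization"] = f"Bearer {token}"
    url = f"{GITHUB_API}/repos/{owner}/{repo}/releases/latest"
    return urllib.request.Request(url, headers=headers, method="GET")


def with_retry(call: Callable[[], T], max_retries: int = 3,
               interval_s: float = 1.0) -> T:
    """Run call(), retrying network errors and 5xx responses up to max_retries times."""
    for attempt in range(max_retries + 1):
        try:
            return call()
        except urllib.error.URLError as err:
            # 4xx responses (e.g. an unknown repo) will not succeed on retry.
            client_error = isinstance(err, urllib.error.HTTPError) and err.code < 500
            if client_error or attempt == max_retries:
                raise
            time.sleep(interval_s)  # retry interval
    raise AssertionError("unreachable")


def fetch_latest_release_notes(owner: str, repo: str,
                               token: Optional[str] = None) -> str:
    """Return the Markdown body of the latest release (needs network access)."""
    request = build_release_request(owner, repo, token)

    def call() -> str:
        with urllib.request.urlopen(request, timeout=10) as response:
            payload = json.load(response)
        return payload.get("body") or ""

    return with_retry(call, max_retries=3, interval_s=2.0)
```

Failing fast on 4xx responses is a design choice of this sketch: a missing repository or an invalid token will not recover on retry, whereas transient network errors and server errors often do.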

Each step can be tested on its own or together with the other steps.

Once published, the app is accessible in the cloud or on-premises, depending on the Dify deployment type.

The app is stored in Dify's DSL format, which keeps the whole workflow very lightweight: the app definition is essentially a single YAML file. Once published, the shared Dify runtime (web + API + workers) interprets that configuration at request time.

The proof of concept was delivered as a Next.js single-page web app, a hosted UI available either in the cloud or on its own domain when self-hosted. API access (HTTP endpoints + API keys) is also supported, so the workflow can be called from custom code, in batch runs or through an MCP server.
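For the API route, a caller could run the published workflow over HTTP. The sketch below assumes Dify's workflow-app endpoint `POST /workflows/run`, authenticated with the app's API key as a bearer token and taking `inputs`, `response_mode` and `user` fields, as described in Dify's API documentation. The input names match the START node's fields; the caller identifier, the function names and the name of the END node's output variable are assumptions, and self-hosted installs use their own base URL.

```python
import json
import urllib.request

# Dify Cloud's API base URL; a self-hosted install exposes its own.
DIFY_API_BASE = "https://api.dify.ai/v1"


def build_workflow_run_request(api_key: str, owner: str, repo: str, audience: str,
                               user_id: str = "release-notes-bot",
                               base_url: str = DIFY_API_BASE) -> urllib.request.Request:
    """POST request that runs the published workflow with the START node's inputs."""
    body = {
        "inputs": {"owner": owner, "repo": repo, "audience": audience},
        "response_mode": "blocking",  # wait for the full result instead of streaming
        "user": user_id,  # hypothetical caller identifier
    }
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/workflows/run",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def run_workflow(api_key: str, owner: str, repo: str, audience: str) -> dict:
    """Run the workflow and return the END node's output variables."""
    request = build_workflow_run_request(api_key, owner, repo, audience)
    with urllib.request.urlopen(request, timeout=120) as response:
        result = json.load(response)
    return result["data"]["outputs"]
```

A batch job could loop over repositories and audiences, calling `run_workflow` for each and reading a result such as `outputs.get("summary")`, where `summary` stands for whatever output variable the END node actually defines.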

Key findings

Initial tests with the cloud-hosted version of dify.ai and OpenAI's GPT models produced encouraging results after some parameter tuning, such as temperature (GPT-4.1) and max tokens (GPT-4.1 and GPT-5 mini). Costs were monitored throughout development and remained low.
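In Dify, these parameters are set per model in the LLM node. Outside Dify, the same tuning could be kept as a per-model settings table. The sketch below assumes OpenAI's Chat Completions request format, where the output limit is the `max_completion_tokens` field; the numbers are placeholders, since the tuned values are not reported here, and `build_chat_payload` is a hypothetical helper.

```python
# Placeholder values: the actual tuned settings are not reported.
MODEL_SETTINGS: dict[str, dict[str, float]] = {
    # temperature was tuned for GPT-4.1 only
    "gpt-4.1": {"temperature": 0.3, "max_completion_tokens": 800},
    # only the output-token limit was tuned for GPT-5 mini
    "gpt-5-mini": {"max_completion_tokens": 2000},
}


def build_chat_payload(model: str, messages: list[dict[str, str]]) -> dict:
    """Request body for OpenAI's Chat Completions API with per-model settings."""
    if model not in MODEL_SETTINGS:
        raise ValueError(f"no settings for model {model!r}")
    return {"model": model, "messages": messages, **MODEL_SETTINGS[model]}
```

Keeping the settings in one table makes it easy to compare models on the same prompts while changing only the parameters that were actually tuned for each.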

Experimenting with local models

To improve data privacy and cost-effectiveness, the setup was migrated to a local dify.ai installation running in Docker, connected to Ollama models hosted directly on a company laptop.

Without a dedicated GPU, the prototype was limited to smaller models such as phi3:mini and llama3:8b (llama3:8b was already noticeably slower). Still, this step validated that fully local processing is feasible with the right hardware – an attractive option for customers that prioritise data privacy.
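For reference, a local model such as phi3:mini or llama3:8b can also be queried directly through Ollama's HTTP API, which is useful for checking a model before wiring it into Dify. This is a minimal sketch, assuming Ollama's default address and its `/api/chat` endpoint with streaming disabled; the function names are hypothetical. When Dify itself runs in Docker, the Ollama base URL configured in Dify typically has to point at the host (for example `http://host.docker.internal:11434` on Docker Desktop) rather than `localhost`.

```python
import json
import urllib.request

# Ollama's default local address.
OLLAMA_BASE = "http://localhost:11434"


def build_ollama_chat_request(model: str, system_prompt: str, release_notes: str,
                              base_url: str = OLLAMA_BASE) -> urllib.request.Request:
    """Non-streaming request to Ollama's /api/chat endpoint."""
    body = {
        "model": model,  # e.g. "phi3:mini" or "llama3:8b"
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": release_notes},
        ],
        "stream": False,  # return one JSON object instead of a token stream
    }
    return urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def summarise_locally(model: str, system_prompt: str, release_notes: str) -> str:
    """Send the notes to a local model and return its reply (Ollama must be running)."""
    request = build_ollama_chat_request(model, system_prompt, release_notes)
    # CPU-only inference can be slow, so allow a generous timeout.
    with urllib.request.urlopen(request, timeout=600) as response:
        reply = json.load(response)
    return reply["message"]["content"]
```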

Conclusion

The experiment demonstrates that tools like dify.ai enable rapid creation of GenAI-based solutions with minimal development effort. Even with limited hardware, the prototype confirmed that integrating a GenAI-powered release note summary generator into an existing release management pipeline would be both practical and valuable. Tailoring information to specific audiences could significantly improve the user experience while reducing the time spent reading through large documents. The idea is worth pursuing in future innovation or customer projects.

Organisations looking to improve release communication or streamline user experience could benefit significantly from similar GenAI-driven approaches.

GenAI can support many individual steps in software engineering. To learn more, download our eBook on AI-guided legacy software modernisation.