by David Carmona (ERNI Spain)

When you run a business, what you want from technology is simple: for it to grow with you without breaking down or sending your bills through the roof. Choosing a technology that can absorb unpredictable peaks, keep latency consistent, and control costs can be a real headache. In this article I explain why Elixir, running on the BEAM virtual machine, could become your best tool for solving concurrency problems.

What is Elixir, and how does BEAM affect your bottom line?

Elixir is a modern language that runs on BEAM, the Erlang virtual machine, which has allowed telecommunications systems to handle millions of connections with minimal hardware. In business terms, this can mean serving more users with fewer machines, which in turn means fewer incidents, lower costs, and less stress.

- Language and platform: Elixir (the language) runs on BEAM (the platform). Together, they provide an execution model designed around ultra-lightweight processes and built-in fault tolerance.

- Focus on availability: The design prioritises keeping the system running even if one part fails. This means fewer interruptions and a more robust system.

- Mature ecosystem: OTP (the platform’s libraries) provides proven architectural patterns for critical services, helping to accelerate delivery.

Elixir/BEAM is NOT ‘just another language’; it is a platform built from the ground up for concurrent, distributed, and always-available systems.
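To make the 'keeps running even if one part fails' idea concrete, here is a minimal sketch of an OTP supervisor restarting a crashed worker. The module names `Demo.Counter` and `Demo.Restart` are hypothetical and exist only for illustration; the sketch assumes a plain Elixir installation with no extra dependencies.

```elixir
defmodule Demo.Counter do
  use GenServer

  # Hypothetical worker: keeps an integer counter inside its own process.
  def start_link(_opts), do: GenServer.start_link(__MODULE__, 0, name: __MODULE__)
  def increment, do: GenServer.call(__MODULE__, :increment)

  @impl true
  def init(initial), do: {:ok, initial}

  @impl true
  def handle_call(:increment, _from, count), do: {:reply, count + 1, count + 1}
end

defmodule Demo.Restart do
  # Starts the counter under a supervisor, kills it, and waits for the restart.
  def run do
    {:ok, sup} = Supervisor.start_link([Demo.Counter], strategy: :one_for_one)

    1 = Demo.Counter.increment()
    old_pid = Process.whereis(Demo.Counter)

    # Simulate a failure in one part of the system.
    Process.exit(old_pid, :kill)
    new_pid = wait_for_restart(old_pid)

    # The restarted worker begins again from its initial state.
    count = Demo.Counter.increment()
    Supervisor.stop(sup)
    %{restarted?: new_pid != old_pid, count_after_restart: count}
  end

  # Polls until the supervisor has registered a fresh process under the name.
  defp wait_for_restart(old_pid) do
    case Process.whereis(Demo.Counter) do
      pid when is_pid(pid) and pid != old_pid ->
        pid

      _ ->
        Process.sleep(10)
        wait_for_restart(old_pid)
    end
  end
end
```

Calling `Demo.Restart.run()` shows that the caller never has to handle the crash itself: the supervisor replaces the failed process, and the rest of the system carries on. Whether losing the worker's in-memory state on restart is acceptable depends on the service; this sketch simply lets it reset.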

How BEAM squeezes every last drop of performance from every core: Real, constant parallelism

Most technology stacks claim to ‘take advantage of multiple cores’, yet bottlenecks often appear in production: lock contention, garbage-collection pauses, growing queues…

BEAM addresses this problem from the ground up.

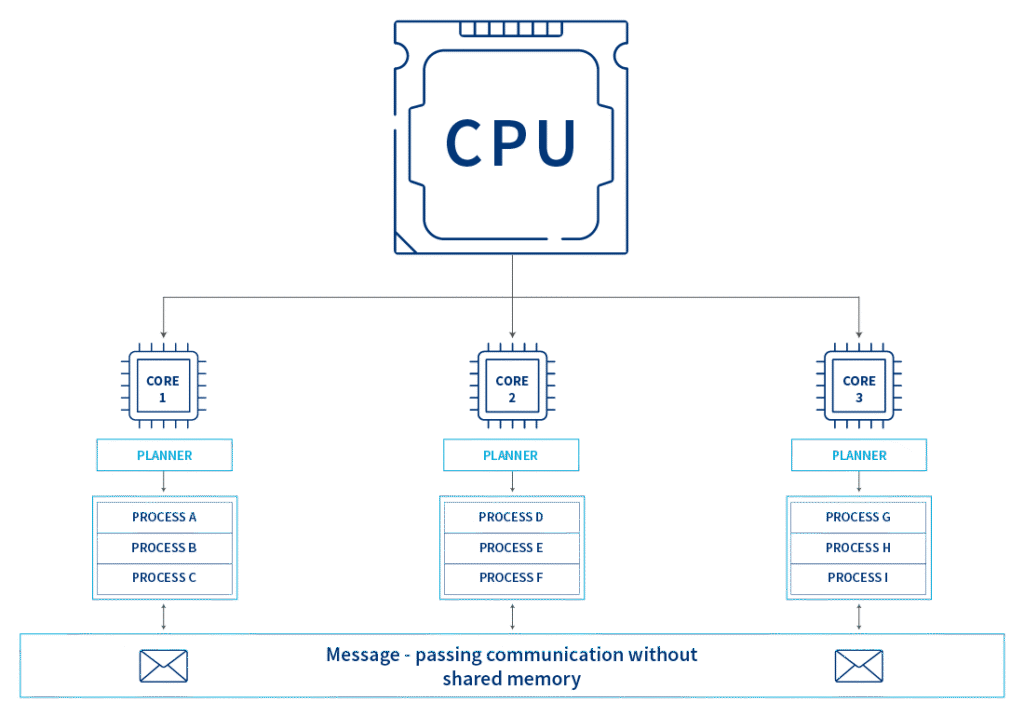

- Schedulers per core: BEAM runs one scheduler per CPU core. Each scheduler manages its own run queue, and work is rebalanced between schedulers when a queue empties or grows too long, keeping all cores consistently busy. Best of all, you don’t need to implement any of this yourself: BEAM handles it natively and automatically.

- Ultra-lightweight processes: In BEAM, a ‘process’ isn’t an operating system process. These are VM-specific actors: they start in microseconds, use very little memory, and a single server can run millions of them.

- No shared memory: Processes don’t share memory; they communicate by passing messages. With no locks or shared global state to fight over, contention is reduced and latency under load becomes more predictable.

- Per-process garbage collection: Each process has its own heap and is collected independently, so collecting one process does not pause the others. This helps avoid latency spikes during peak times.
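The points above can be tried out in a few lines. The following is a rough sketch, not a benchmark: it spawns many BEAM processes, hands each one its work through a message, and measures the memory of a single idle process. `Demo.Processes` and its function names are hypothetical; `spawn/1`, `send/2`, `receive`, and `Process.info/2` are standard Elixir.

```elixir
defmodule Demo.Processes do
  # Spawns `n` BEAM processes. Each one waits for a single message and
  # replies to the parent with double the value it received.
  def fan_out(n \\ 100_000) do
    parent = self()

    pids =
      for _ <- 1..n do
        spawn(fn ->
          receive do
            {:double, value} -> send(parent, {:result, value * 2})
          end
        end)
      end

    # No shared memory: work is handed over only by sending messages.
    pids
    |> Enum.with_index(1)
    |> Enum.each(fn {pid, i} -> send(pid, {:double, i}) end)

    # Collect exactly one reply per process and sum them.
    Enum.reduce(1..n, 0, fn _, acc ->
      receive do
        {:result, doubled} -> acc + doubled
      end
    end)
  end

  # Returns the memory footprint, in bytes, of one idle process.
  def idle_process_memory do
    pid =
      spawn(fn ->
        receive do
          :stop -> :ok
        end
      end)

    {:memory, bytes} = Process.info(pid, :memory)
    send(pid, :stop)
    bytes
  end
end
```

With the default argument, `Demo.Processes.fan_out()` starts 100,000 processes and returns `10_000_100_000`, the sum of 2i for i from 1 to 100,000. `System.schedulers_online()` reports how many schedulers the VM is running, which by default matches the number of cores available to it.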

From theory to practice

As a practical example, I will refer to a 2016 article by Chris McCord on the DockYard blog (Phoenix Channels vs Rails Action Cable), in which the team behind the Phoenix framework (built on Elixir) reports maintaining 2 million active WebSocket connections on a single virtual machine. How did they do it?

- Hardware: One Amazon EC2 m4.10xlarge instance (40 cores, 160 GB RAM).

- Software: Elixir + Phoenix Channels.

- Results:

  - 2 million active connections.

  - Average latency of ~2 ms.

  - Memory consumption per connection: ~1 KB.

  - No crashes, no GC pauses, no queue saturation.

Why is this impressive?

In other stacks, such as Node.js or Ruby on Rails, maintaining connections at this scale typically requires:

- Load balancers.

- Multiple instances.

- Redis for pub/sub.

- Much more hardware.

With Elixir and BEAM, much of this becomes simpler thanks to the native concurrency model. Each connection runs as an isolated, supervised process, and the schedulers distribute those processes across the cores automatically.
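As an illustration of the process-per-connection idea, here is a simplified sketch that uses only the standard library. It is not how Phoenix Channels is implemented: `Demo.Connection` is a hypothetical stand-in for a WebSocket connection, and `Demo.Hub`, `Demo.PubSub`, and `Demo.Connections` are made-up names. It relies on Elixir's built-in `Registry` for in-process pub/sub, which works within a single node only.

```elixir
defmodule Demo.Connection do
  use GenServer

  # Hypothetical stand-in for one client connection: a process that
  # subscribes to a topic in a local Registry (no external broker).
  def start_link({topic, client_id, report_to}) do
    GenServer.start_link(__MODULE__, {topic, client_id, report_to})
  end

  @impl true
  def init({topic, client_id, report_to}) do
    {:ok, _owner} = Registry.register(Demo.PubSub, topic, client_id)
    {:ok, %{client_id: client_id, report_to: report_to}}
  end

  @impl true
  def handle_info({:broadcast, payload}, state) do
    # A real connection would push the payload down its socket here.
    send(state.report_to, {:delivered, state.client_id, payload})
    {:noreply, state}
  end
end

defmodule Demo.Hub do
  # Starts the topic registry and a supervisor for connection processes.
  def start do
    children = [
      {Registry, keys: :duplicate, name: Demo.PubSub},
      {DynamicSupervisor, name: Demo.Connections, strategy: :one_for_one}
    ]

    Supervisor.start_link(children, strategy: :one_for_one)
  end

  # One supervised process per connection; deliveries are reported to the caller.
  def connect(topic, client_id) do
    DynamicSupervisor.start_child(
      Demo.Connections,
      {Demo.Connection, {topic, client_id, self()}}
    )
  end

  # Sends the payload to every process subscribed to the topic.
  def broadcast(topic, payload) do
    Registry.dispatch(Demo.PubSub, topic, fn entries ->
      for {pid, _client_id} <- entries, do: send(pid, {:broadcast, payload})
    end)
  end
end
```

After `Demo.Hub.start()`, each call to `Demo.Hub.connect("room:lobby", id)` adds one isolated process, and `Demo.Hub.broadcast("room:lobby", "hello")` reaches all of them. If one connection process crashes, the others are unaffected. A multi-node deployment would need a distributed pub/sub layer on top of this.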


Where it shines and how to introduce it without overhauling your business

It’s not about replacing everything, but about addressing the right bottlenecks.

What are the ideal use cases?

- Real-time: chats, notifications, live collaboration, streaming.

- High-volume integrations: payment gateways, burst APIs, IoT with millions of devices.

- Low-latency web: Phoenix and LiveView deliver responsive experiences with far less front-end complexity.
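For the burst-API case in the list above, one common approach is to process requests concurrently while capping how many are in flight at once. The sketch below uses `Task.async_stream/3` from the standard library; `Demo.Burst` and `handle_request/1` are hypothetical placeholders, and the sleep merely simulates I/O such as a call to a payment gateway.

```elixir
defmodule Demo.Burst do
  # Processes a burst of requests concurrently, with at most
  # `max_concurrency` running at any moment. Results keep input order.
  def process(requests, max_concurrency \\ System.schedulers_online() * 4) do
    requests
    |> Task.async_stream(&handle_request/1,
      max_concurrency: max_concurrency,
      timeout: 5_000,
      on_timeout: :kill_task
    )
    |> Enum.map(fn
      {:ok, response} -> response
      {:exit, reason} -> {:error, reason}
    end)
  end

  # Hypothetical handler: stands in for real work such as an HTTP call.
  defp handle_request(id) do
    Process.sleep(10)
    {:ok, id * 2}
  end
end
```

The concurrency cap is the design choice that matters here: a sudden spike queues up behind the limit instead of overwhelming a downstream service, and a request that exceeds the timeout is killed and reported as an error without affecting the others. The default of four tasks per scheduler is an arbitrary starting point, not a recommendation.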

Success doesn’t come from ‘using Elixir for everything’, but from identifying the system’s weakest point, where the case for Elixir is clear and it delivers the most value to users.

Use cases and real-world experiences

Elixir is increasingly being adopted by companies that need fast, stable responses to millions of simultaneous requests, often migrating only the most critical parts and dramatically reducing infrastructure costs:

- Discord: Has used Elixir as the backbone of its real-time chat infrastructure from the start, relying on its ability to handle millions of active connections with low latency and high resilience (https://elixir-lang.org/blog/2020/10/08/real-time-communication-at-scale-with-elixir-at-discord/).

- Pinterest: Reduced backend servers from 200 to 4 by migrating to Elixir, saving over $2 million annually in infrastructure costs (https://paraxial.io/blog/elixir-savings).

- Bleacher Report: Achieved efficient real-time management of sporting events (https://www.erlang-solutions.com/case-studies/bleacher-report-case-study/).

- Startups and fintech companies: Elixir/Phoenix is increasingly the preferred choice when scalability and low latency are critical (https://curiosum.com/blog/12-startups-using-elixir-language-in-production).

Conclusion

Elixir on BEAM is not an eccentric gamble; it’s a way to achieve what matters most at digital scale – handling peaks, maintaining stable latency, ensuring resilience, and reducing costs – without adding complexity.

If your growth today relies on adding machines, retries and patches, you’re accumulating a ‘concurrency debt’. Switching to an execution platform designed for this purpose not only improves your metrics but also your mornings: fewer surprises and lower costs.